Large Language Models (LLMs) are driving a pivotal shift in the business technology landscape. Offering high efficacy and deep insight into data processing, this groundbreaking technology is becoming indispensable in the enterprise realm.

LLMs have been quietly transforming search for years, but they came into the limelight with breakthroughs like ChatGPT, making questions like “Will AI replace humans?” a common topic of conversation.

ChatGPT has indeed gained widespread attention for its impressive and sometimes mind-bending results. However, it is just one application of the many powerful AI models known as LLMs, which use machine learning to analyze and comprehend human language.

Beyond analyzing and understanding vast, diverse data sets, this technology has become a foundational tool for modern enterprises. Yes, LLMs are not just a technological breakthrough but a strategic solution that helps enterprises unlock new opportunities. Like two sides of a coin, however, their continued advance brings both opportunities and challenges.

So, what is the best way for enterprises to implement and capitalize on this technology? That is exactly what this blog focuses on. By demystifying the challenges and solutions around integrating LLMs into the enterprise, we aim to share insights that help enterprises make the most of this mind-blowing technology.

What are LLMs? Read our blog on “Applications of LLMs in Business” to learn what LLMs are, why businesses should deploy them, and how they are applied across the business landscape.

How many companies plan to integrate LLMs in 2024? There are currently about 300 million companies in the world. According to a report by iOPEX, nearly 67% of them already use generative AI products that rely on LLMs to work with human language and produce content. A Gartner survey found that 55% of corporations were either piloting or rolling out LLM projects, and this number is expected to grow rapidly. These figures are huge and point to extensive potential.

With rapid development and innovation, enterprises are increasingly showing interest in integrating LLMs into their business operations for various reasons.

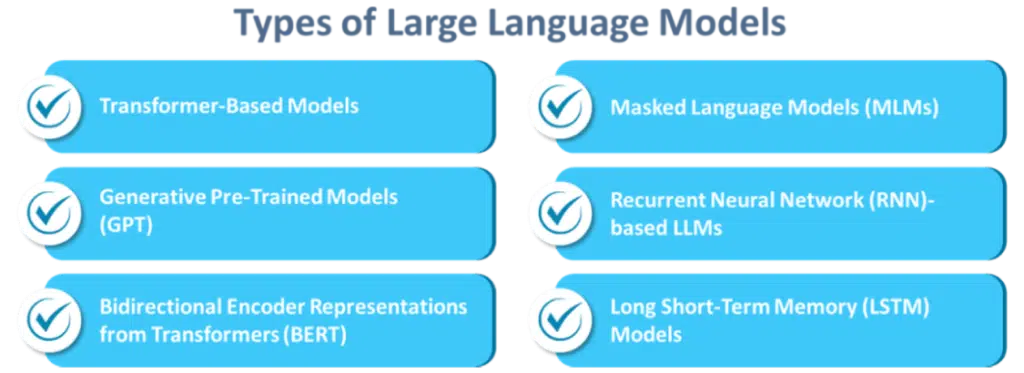

Different types of language models, from today's LLMs to the architectures that preceded them, have been developed to address specific challenges in NLP. Each type has its own strengths in text comprehension, text generation, and interaction. By deploying these diverse models, enterprises can handle a wide range of tasks effectively. So, let’s find out what these types are and what they have to offer.

Transformers, or transformer-based models, are revolutionizing the way computers interpret natural human language. Unlike earlier models that process words one at a time, Transformers use an attention mechanism to evaluate an entire sentence at once, which improves their ability to grasp linguistic subtleties. They were introduced in the seminal 2017 paper “Attention Is All You Need” and were designed for sequence-to-sequence tasks, which convert one sequence into another, such as translating text from one language to another. This approach has made them highly effective for a wide range of language processing applications.
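
To make “evaluating an entire sentence at once” concrete, here is a minimal, dependency-free sketch of scaled dot-product attention, the core operation described in that paper: every token's query is compared with every token's key in one pass, and the resulting weights mix the value vectors. This is a simplified illustration, not a full Transformer: it assumes made-up two-dimensional token vectors and omits the learned projection matrices, multiple heads, and masking that real models use.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key in the sequence, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Hypothetical embeddings for a three-token sentence (self-attention: Q = K = V).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in scaled_dot_product_attention(tokens, tokens, tokens):
    print([round(v, 3) for v in row])
```

Because the weights for each token are computed against the whole sequence in one step, nothing has to be processed strictly left to right, which is what lets Transformers parallelize far better than recurrent models.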

GPT (Generative Pre-trained Transformer) models are LLMs built on the transformer architecture and extensively pre-trained on large data sets. During pre-training, the model learns the structure and patterns of language, including semantics, grammar, and context, by learning to predict the next word in a sequence. These models excel at tasks such as text completion, creative writing, and conversation.
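
The pre-training objective behind GPT models, predicting the next token from the ones before it, and the autoregressive loop used to generate text can be sketched in a few lines. This is a deliberately toy stand-in: it uses a bigram count model (which only looks at the single previous word) on a hypothetical six-word corpus, whereas real GPT models use a deep transformer over subword tokens and long contexts. Only the objective and the generation loop carry over.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    # Count how often each token follows each other token (toy stand-in for a GPT).
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    # Turn follower counts into a probability distribution over the next token.
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def next_token_loss(counts, tokens):
    # Average negative log-likelihood of each token given the one before it:
    # the quantity that pre-training tries to minimize.
    nll = [-math.log(next_token_probs(counts, p)[n]) for p, n in zip(tokens, tokens[1:])]
    return sum(nll) / len(nll)


def generate(counts, start, max_new_tokens):
    # Greedy autoregressive decoding: repeatedly append the most likely next token.
    out = [start]
    for _ in range(max_new_tokens):
        if not counts.get(out[-1]):
            break  # no known continuation for this token
        probs = next_token_probs(counts, out[-1])
        out.append(max(probs, key=probs.get))
    return out


corpus = "the cat sat on the mat".split()  # hypothetical training text
model = train_bigram(corpus)
print(next_token_loss(model, corpus))
print(generate(model, "cat", 3))
```

Swapping the bigram table for a neural network that conditions on the full preceding context, while keeping the same loss and loop, is essentially what separates this sketch from a real GPT.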

Introduced by Google in 2018, Bidirectional Encoder Representations from Transformers (BERT) is a machine learning framework for NLP that uses surrounding text to establish context and make sense of ambiguous language.

BERT is a language model that examines the context of a given word in both directions, looking at the words before and after it. It is trained with a technique called masked language modeling, in which a few words in a sentence are hidden, or masked, and the model learns to predict them from the surrounding text.

As the name suggests, Masked Language Models (MLMs) are language models trained on this masking objective. Certain words or tokens in each input are randomly hidden, or masked, and the model is trained to predict them from the surrounding text. Because the training targets come from the text itself, MLMs are a form of self-supervised learning: they learn from raw text without requiring manual labels or annotations.
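
The masking step itself is simple to sketch. The function below follows the recipe described in the original BERT paper: each token is selected with some probability (15% by default), and a selected token is replaced by a `[MASK]` symbol 80% of the time, by a random vocabulary token 10% of the time, and left unchanged 10% of the time. The labels record the original token only at selected positions, which is why no human annotation is needed. The sentence and vocabulary here are hypothetical; real systems operate on subword token IDs rather than whole words.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (inputs, labels) for masked language modeling.

    labels[i] holds the original token where the model must predict it, else None.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # training target comes from the text itself
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)  # 80%: hide the token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: swap in a random token
            else:
                inputs.append(tok)  # 10%: keep the original token
        else:
            labels.append(None)  # no loss is computed at this position
            inputs.append(tok)
    return inputs, labels


sentence = "the model predicts the hidden words".split()
vocab = sorted(set(sentence))
inputs, labels = mask_tokens(sentence, vocab, mask_prob=0.3, seed=0)
print(inputs)
print(labels)
```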

A Recurrent Neural Network (RNN) is a type of deep neural network designed to handle sequential or time series data, making predictions or drawing conclusions from sequential inputs. RNNs are often used to forecast events, such as issuing daily flood alerts based on historical and meteorological data. They are also widely used for temporal problems in language and speech, including NLP, translation, and speech recognition. These core capabilities made RNNs a key technology behind applications such as Google Translate, Siri, and other voice search tools.
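
The defining idea of an RNN is a hidden state that is updated at every time step and carried forward, so each prediction can depend on everything seen so far. The sketch below shows this recurrence for a simple (Elman-style) RNN with a single hidden unit, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). The weights and the input readings are hypothetical values chosen for illustration; a trained RNN uses vector states and learned weight matrices.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a single-unit RNN over a sequence: h_t = tanh(w_x*x_t + w_h*h_{t-1} + b)."""
    h = h0
    states = []
    for x in inputs:
        # The same weights are reused at every step; h carries memory of earlier inputs.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical normalized daily readings (e.g. rainfall) fed in time order.
readings = [1.0, 0.5, -0.2, 0.8]
print(rnn_forward(readings, w_x=0.8, w_h=0.5, b=0.1))
```

Setting `w_h` to zero would make each state depend only on the current input; the recurrent weight is what lets past inputs influence the present.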

Long Short-Term Memory (LSTM) is a Recurrent Neural Network (RNN) architecture designed to handle long-term dependencies in sequential data. Standard RNNs struggle to learn from information many steps back because of the vanishing gradient problem; LSTMs add gates that control what to keep, what to write, and what to output, so they can retain and use information from the distant past far more effectively. This makes them well suited to time series analysis, NLP, and more.
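
The gating idea can be sketched as a single LSTM step with one hidden unit. The forget, input, and output gates are sigmoids between 0 and 1, and the cell state is updated additively (c = f·c_prev + i·g), which is what allows information to persist across many steps. The parameter values below are hypothetical and hand-picked to show a cell that "remembers": a forget gate biased near 1 and an input gate biased near 0 keep the old cell state almost unchanged. A real LSTM learns vector-valued weights for each gate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. `p` maps gate names to (w_x, w_h, b) tuples."""

    def gate(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # how much of the old cell state to keep
    i = gate("input", sigmoid)         # how much new information to write
    g = gate("candidate", math.tanh)   # the candidate new information
    o = gate("output", sigmoid)        # how much of the cell state to expose
    c = f * c_prev + i * g             # additive update lets information persist
    h = o * math.tanh(c)
    return h, c


def lstm_forward(inputs, p):
    h, c = 0.0, 0.0
    for x in inputs:
        h, c = lstm_step(x, h, c, p)
    return h, c


# Hypothetical parameters: forget gate almost always open, input gate almost closed.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
print(lstm_step(5.0, 0.0, 1.0, params))  # the cell state stays close to 1.0
```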

Selecting the most suitable LLM is a strategic decision that can profoundly affect your enterprise's ability to make the most of AI. By exploring in detail what each of these models has to offer, you can refine your selection for the best possible outcome.

Will enterprises abandon their own LLMs? According to a report by Gartner, around 50% of enterprises will abandon homegrown LLMs by 2028 due to factors such as cost, growing technical debt, and complexity. Gartner also predicts that by 2027, enterprises will increasingly use generative AI tools to explain legacy business applications and create replacements, reducing modernization costs by 70%.

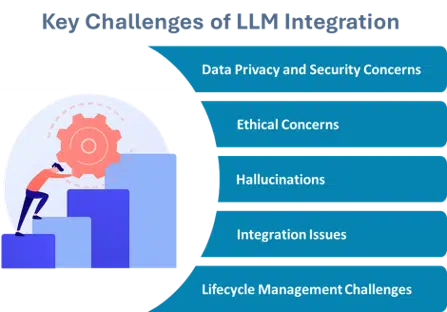

LLMs are indeed at the forefront of innovation and poised to give enterprises a breakthrough. At the same time, it is essential to have a clear picture of the challenges that come with integrating LLMs and how they can be resolved.

| Area | Challenge | Solution |
|---|---|---|
| Data Privacy and Security Concerns | Integrating LLMs into the enterprise can be a game-changer, but even as this innovation brings a range of benefits, it also poses challenges. One of the most prominent is data privacy and security. LLMs process enormous amounts of data, which may include sensitive information that attracts cyberattacks, so businesses need to take deliberate steps to address this risk. | Enterprises should take a comprehensive approach that combines data hygiene, access controls, continuous monitoring, and policy enforcement. Data hygiene keeps LLM training data clean and free of unnecessary sensitive information. Access controls restrict LLM training data to authorized personnel. Continuous monitoring detects and responds to unusual activity. Policy enforcement establishes and upholds clear guidelines for handling LLM training data. |
| Ethical Concerns | Large Language Models come with ethical challenges, most often tied to their linguistic capabilities. A major concern is bias: a pre-trained model can unintentionally learn and mirror the biases already present in its training data. This can reinforce discrimination, marginalization, and stereotypes. | Tackling this challenge requires striking the right balance. It is a team effort that depends on collaboration among AI researchers, policymakers, developers, and other stakeholders involved in decision-making. To ensure LLMs adhere to ethical standards, enterprises also need a proactive plan to identify and mitigate bias, promote transparency, and prioritize responsible AI development practices. |
| Hallucinations | Hallucinations are among the most pressing issues businesses face when integrating LLMs. A hallucination is an output that is factually incorrect or contextually inappropriate; it can spread misleading or false information and undermine trust in AI systems. Actively reducing hallucinations helps avoid real-world consequences. | Enterprises need a multifaceted approach that spans everything from pre-processing to ongoing maintenance. Strategies such as advanced prompting techniques, retrieval-augmented generation (RAG), few-shot and zero-shot learning, and fine-tuning can all help. |
| Integration Issues | Integrating LLMs into an existing IT environment can be overwhelming. These models often need extensive data inputs to perform well, so they must integrate smoothly with the enterprise’s existing infrastructure and databases. And because output quality depends heavily on the quality of input data, a strong data foundation is critical to a successful LLM deployment. | Use emerging APIs and data formatting frameworks designed specifically to simplify integration. Middleware and data transformation tools play a crucial role in connecting LLMs with existing systems, enabling smooth data exchange and communication. With these technologies in place, organizations can ensure that LLMs interact effectively with their infrastructure and produce more valuable, actionable outputs. |
| Lifecycle Management Challenges | As LLMs scale, managing their development and keeping their outputs aligned with expectations can be daunting. The complexity of these large systems creates many opportunities for the model to drift away from its intended behavior, so it is mission-critical for businesses to ensure the model stays relevant and performs as expected over time. | Businesses should build a strong governance framework and implement continuous monitoring routines. This should include regular performance assessments, retraining with updated data, and strict version control to keep the model in sync with business goals. Cross-functional oversight and automated monitoring tools can also help keep the LLM on track. |
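
Of the hallucination strategies listed in the table, retrieval-augmented generation (RAG) is the easiest to illustrate: before the model answers, relevant passages are retrieved from trusted enterprise content and placed in the prompt, and the model is instructed to answer only from them. The sketch below is a simplified assumption, not a production design: it ranks documents by plain word overlap (real systems typically use embedding-based vector search), the policy documents are hypothetical, and the call to an actual LLM is left out because it depends on whichever model or API an enterprise uses.

```python
import re


def tokenize(text):
    # Lowercase word set; a crude stand-in for real embeddings.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    # Rank documents by how many words they share with the question.
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question, documents, k=2):
    # Ground the model by putting retrieved passages in the prompt and
    # asking it to answer only from them.
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical knowledge base entries.
docs = [
    "Our refund window is 30 days from delivery.",
    "Support is available Monday to Friday.",
    "Shipping to Canada takes 5 to 7 business days.",
]
print(build_rag_prompt("How many days is the refund window?", docs, k=1))
```

The resulting prompt would then be sent to the chosen LLM. Because the answer is tied to retrieved source text, it is easier to verify, and the explicit "say you don't know" instruction gives the model a sanctioned alternative to inventing an answer.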

Businesses need to keep in mind that not all LLMs are the same, and neither are their use cases. Each use case may require a different model to achieve the desired outcome. That is why it is essential to take stock of the challenges and the solutions that can streamline LLM implementation, and to deploy LLMs for the best possible outcomes.

Embracing LLMs demands continuous learning and adaptation, and enterprises must be prepared for both. As AI evolves, so will the opportunities.

As a technology-first company, Calsoft strongly believes in staying ahead of trends and continuously refining its approaches and solutions. Our AI and ML solutions are built to transform the present and future of businesses by solving their biggest challenges.