AI and ML are no longer just futuristic ideas; they’re now reshaping how businesses compete and operate. Recent data from McKinsey highlights that these technologies could increase corporate profits by $4.4 trillion a year, revealing their potential to drive significant growth for your business. They are revolutionizing industries ranging from cybersecurity to healthcare, and the journey has just begun: these technologies are poised to keep growing, evolving, and integrating with one another.

Artificial Intelligence, or simply AI, is a broad field that seeks to create systems that can perform tasks that were previously only possible with the involvement of human intelligence. These activities include, but are not limited to, learning, reasoning, problem-solving, and perception. AI systems are typically capable of working with any type of data, whether it is structured, semi-structured, or unstructured. This helps the AI system analyze and process data across a variety of formats.

ML, or Machine Learning, on the other hand, is a subfield within the larger AI branch. ML focuses on letting systems learn from provided data and improve their functional capabilities without the need for further specialized programming. To achieve this, ML employs advanced algorithms that can identify patterns and trends contained within the data provided. This mechanism facilitates tasks like pattern recognition, predictive modeling, and classification. Unlike AI, ML needs high-quality structured and semi-structured data to ensure optimal performance.

AI and ML share a foundational relationship. All ML technologies fall under the larger AI umbrella, but not all AI technologies are necessarily ML-based. AI is the latest disruptor in tech, and it’s poised to be the biggest invention since the internet. So, let’s try to assess why AI/ML is so hyped!

Through the implementation of AI/ML strategies, businesses like yours can address issues hindering technological integration. A well-defined AI/ML implementation strategy acts as a roadmap, allowing you to leverage the technology to achieve business objectives consistent with larger organizational goals. This blog gives you a comprehensive overview of AI/ML strategies!

By employing such strategies, you can optimize processes, effectively manage risks, and enhance operational efficiency. Proper implementation of AI and related technologies has a tangible effect on key business outcomes like productivity and decision-making. Through the automation of repetitive tasks, employees can instead focus on more strategic initiatives.

By following a structured AI/ML implementation plan, you can integrate these technologies seamlessly with your existing systems, with minimal disruption and maximum productivity. You need to thoughtfully consider how AI systems can work in tandem with your existing workflows to achieve an effective and coherent environment for your operations.

Ethics also plays an important role in AI/ML implementation strategies. Responsibility is key, and your organization must develop AI systems that are transparent, fair, and accountable. Doing so helps significantly in building trust among stakeholders and minimizes risks arising from misuse of, or bias in, AI technology.

This blog details all the above-mentioned issues. Let’s start with basic AI/ML/DL concepts!

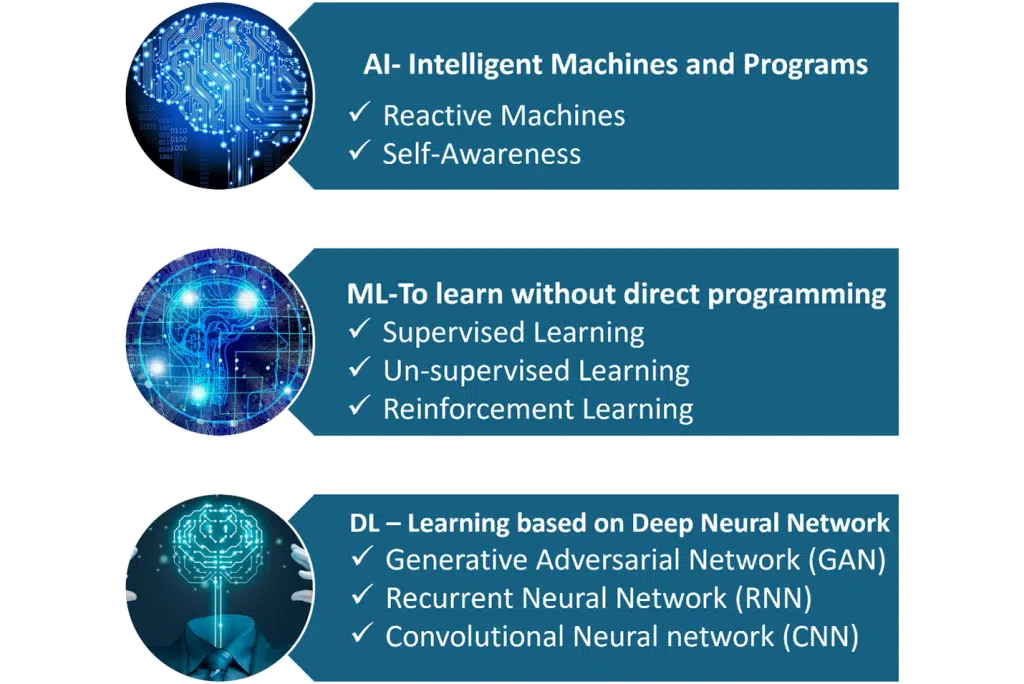

It would be erroneous to view AI, ML and DL separately as they are fields that are closely interrelated to one another, both conceptually and practically. And all three are all set to completely change the way human beings interact with technology. Let’s examine the three, one by one!

AI technology, as we mentioned before, strives to create artificial technological systems that can mimic cognitive functions previously limited only to human beings. By employing a wide range of subset technologies, the field enables machines to perform tasks that formerly only humans could carry out.

AI enables machines to understand natural human language, recognize and identify patterns, and make data-driven decisions. The applications of AI technologies spread over a vast range of industries. It is already causing significant disruptions in fields like automotive, healthcare, and finance, amongst others.

ML, on the other hand, is a subset of AI that focuses on creating algorithms and statistical models that let computing machines learn from provided data and predict or make decisions based on the training data.

ML frees programs from having to be explicitly programmed for every task they carry out. ML technologies can tap into historical data and identify trends and patterns that are often too subtle for human beings to figure out. Such ML-enabled machines can also optimize their performance over time. ML systems form the foundation of a wide range of applications like:

All these serve to enhance the operational efficiency of businesses like yours across a wide range of domains.

Just as ML is a subset of AI, DL, or Deep Learning, is a specialized branch of ML. DL uses multi-layered artificial neural networks (ANNs) to model and solve complex problems. DL is particularly effective in situations where businesses need to process a vast amount of unstructured text or image data.

Through DL, the feature extraction process is automated, which lets the algorithms learn directly from raw data. This has facilitated breakthroughs in fields like Natural Language Processing, Computer Vision, and Speech Recognition. The high levels of computing power that modern chips provide have contributed to the success and wide application of DL technologies.

Let’s now take a detailed look at the three technologies along with associated adoption strategies.

AI is transforming strategic decision-making processes by enhancing the scope and capability of making data-driven decisions. AI tools can automatically collect and analyze large sets of data, thereby providing you with invaluable insights and objective predictions. It is particularly noted for its effectiveness in industries where extensive amounts of data are involved and there is a strong need to make real-time decisions.

We have briefly discussed what AI is before, but we have not touched upon its scope. It is wrong to assume that the scope of AI is limited to automation. Not at all; AI applications also extend to fields like user interactions and data analysis. In short, AI fundamentally changes the way humans interact with technology.

AI is a broad field consisting of a wide range of promising cutting-edge technologies. ML is just one of them. Some other important branches that your business should take note of include:

NLP: Natural Language Processing (NLP) is a promising AI subset. With this technology, machines can understand inputs and generate outputs in natural human language.

Robotics: The field of robotics integrates AI technologies with physical machines, resulting in the creation of artificial systems able to perform tasks autonomously.

Some other important AI subfields are computer vision, through which machines can interpret visual data, and expert systems, which are designed to emulate human expertise in particular domains.

While there are many AI models out there and new ones are being continuously developed with further research, some AI models have already gained high levels of popularity for the effectiveness and versatility of their uses. Some of them include:

Large Language Models (LLMs) are essentially sophisticated algorithms that use DL techniques to understand, generate, and manipulate text written in a human language. LLMs, as the name suggests, have been trained on vast datasets created from written texts, which gives them the ability to predict upcoming words in sentences or generate entire contextually relevant and coherent paragraphs.

LLMs use the transformer network architecture, which is built around attention mechanisms that enhance the models’ ability to process language. Attention lets the model focus on the most relevant words or phrases anywhere in the input it is given.
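To make the attention idea more concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are made-up two-dimensional values chosen for illustration, and a real transformer adds learned projections, multiple heads, and many layers that are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to every key, scaled by sqrt(dimension)
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted sum of the value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Hypothetical vectors standing in for three input tokens
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 1.0]

weights, output = attention(query, keys, values)
print(weights)  # the third token gets the largest weight
print(output)
```

The third key is the most similar to the query, so it receives the largest weight and contributes most to the output.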

LLMs have a wide range of uses, from creating textual content, translating texts, and summarization to intelligent, human-like conversations. These abilities give them a wide variety of use cases like content creation, customer service, and analysis of legal documents. With continued development, LLMs are becoming increasingly able to produce human-like responses. While that broadens the potential for innovation, it also comes with its own set of ethical implications and biases arising from the training data.

Let’s look at decision trees in a little more detail. A decision tree is a popular supervised machine learning algorithm that handles both regression and classification tasks. Its structure resembles a flowchart that branches off from the root node. The branches are created on the basis of particular features, and the final output is produced in the leaf nodes. Decision trees excel at being easy to interpret, because they lay out the decision-making process visually.

During training, decision trees use metrics like Gini impurity or entropy to determine the best split at each node, with the goal of creating homogeneous sub-groups. However, despite their simplicity, these models are vulnerable to overfitting when there are too many features or the dataset is small. In such cases, methods like pruning are used to make the tree less complex and enhance the model’s generalization.
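The split criteria named above can be sketched in a few lines of Python. The example below computes Gini impurity and entropy for a list of labels and then scans one numeric feature for the threshold with the lowest weighted Gini impurity. The data and the helper name `best_split` are hypothetical; real libraries search over many features and apply further stopping rules.

```python
import math
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 for a pure group, higher for mixed groups."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def entropy(labels):
    """Shannon entropy in bits: 0.0 for a pure group."""
    n = len(labels)
    return -sum((count / n) * math.log2(count / n)
                for count in Counter(labels).values())

def best_split(xs, ys):
    """Find the threshold t (rule: x <= t) with the lowest weighted Gini."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if best is None or score < best[1]:
            best = (t, score)
    return best

# Hypothetical feature values and class labels
xs = [1, 2, 3, 10, 11, 12]
ys = ["no", "no", "no", "yes", "yes", "yes"]

print(gini(ys), entropy(ys))  # impurity of the unsplit data
print(best_split(xs, ys))     # threshold that yields two pure groups
```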

Support Vector Machines (SVMs) are a form of supervised learning model that works best for classification tasks. An SVM starts by identifying the optimal hyperplane separating the different classes present in the feature space. The optimal hyperplane is the one that maximizes the margin, that is, the distance to the closest points of each class. Those closest points are called support vectors.

This model is used mostly in cases that involve high-dimensional spaces and non-linear data. SVMs can effectively handle non-linear data by using kernel functions, which implicitly map the data into a higher-dimensional space where the classes become linearly separable.
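The following sketch illustrates why mapping data into a higher-dimensional space helps. It uses a made-up one-dimensional dataset that no single threshold can separate, and shows that the classes become separable after a polynomial feature map. It also checks that the polynomial kernel gives the same result as a dot product in the mapped space. The function names and data are assumptions for illustration, and this is not a full SVM training routine.

```python
import math

def phi(x):
    """Explicit feature map matching the kernel (x*z + 1)**2 for 1-D inputs."""
    return (x * x, math.sqrt(2) * x, 1.0)

def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed without building phi(x)."""
    return (x * z + 1) ** 2

def separable_by_threshold(points, labels):
    """True if a single threshold on a 1-D value splits the two classes."""
    ordered = [label for _, label in sorted(zip(points, labels))]
    changes = sum(1 for a, b in zip(ordered, ordered[1:]) if a != b)
    return changes <= 1

# Hypothetical data: the "inner" class sits between the "outer" points
points = [-3.0, -1.0, 1.0, 3.0]
labels = ["outer", "inner", "inner", "outer"]

raw_ok = separable_by_threshold(points, labels)
# After the mapping, the first coordinate (x squared) separates the classes
mapped_ok = separable_by_threshold([phi(x)[0] for x in points], labels)
print(raw_ok, mapped_ok)

# The kernel equals the dot product of the mapped vectors
dot = sum(a * b for a, b in zip(phi(2.0), phi(3.0)))
print(poly_kernel(2.0, 3.0), dot)
```

Because the kernel returns the mapped dot product directly, an SVM can work in the higher-dimensional space without ever constructing the mapped vectors.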

SVMs can also be used for regression, and they work well with smaller datasets. Still, you need to tune their parameters carefully to achieve optimum performance and to balance bias against variance so that the model does not overfit.

KNN, or K-nearest Neighbour, is a non-parametric supervised learning algorithm commonly used for both regression and classification. It is attractive for the simplicity of its approach, which relies on the proximity of data points in a multi-dimensional space. When provided with a new data point, the algorithm identifies the “k” nearest points based on a distance metric, which is usually the Euclidean distance. The model then makes its prediction from those neighbors: the majority class in classification tasks, or the average value in regression tasks.

KNN is easy and intuitive to implement, but it is not efficient in terms of the computational resources it consumes. This is especially true for large datasets, because each prediction involves calculating the distance from the new data point to all existing data points. Further, the value you choose for “k” can affect the model’s performance considerably. Cross-validation techniques can help in optimizing this “k” parameter.
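A minimal KNN sketch in plain Python shows both the classification and regression variants described above. The training points are invented for illustration, and the brute-force distance scan is exactly the cost that makes KNN slow on large datasets.

```python
import math
from collections import Counter

def nearest_neighbors(train, query, k):
    """Return the k training items closest to `query` (Euclidean distance)."""
    return sorted(train, key=lambda item: math.dist(item[0], query))[:k]

def knn_classify(train, query, k=3):
    """Predict the majority label among the k nearest neighbors."""
    votes = Counter(label for _, label in nearest_neighbors(train, query, k))
    return votes.most_common(1)[0][0]

def knn_regress(train, query, k=3):
    """Predict the average target value among the k nearest neighbors."""
    neighbors = nearest_neighbors(train, query, k)
    return sum(target for _, target in neighbors) / k

# Hypothetical labeled points forming two groups
labeled = [((1, 1), "a"), ((1, 2), "a"), ((2, 1), "a"),
           ((6, 6), "b"), ((7, 6), "b"), ((6, 7), "b")]
print(knn_classify(labeled, (1.5, 1.5)))
print(knn_classify(labeled, (6.5, 6.5)))

# Hypothetical numeric targets for the regression variant
priced = [((1.0,), 10.0), ((2.0,), 20.0), ((3.0,), 30.0), ((10.0,), 100.0)]
print(knn_regress(priced, (2.0,), k=3))
```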

Random forest is an ensemble learning method that creates multiple decision trees during the training process and outputs the majority-vote class for classification use cases or the average prediction for regression use cases. Combining many trees makes the model more robust and minimizes the risk of overfitting associated with individual decision trees.

By bringing random factors into the feature selection process, the model ensures that different trees capture different facets of the data, ultimately resulting in greater predictive power.

One of the model’s key strengths is its ability to handle both numerical and categorical features while performing consistently, even in cases where data is missing. It also gives your business insight into the importance of individual features, which can help you further refine models and guide data collection efforts.
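The ensemble idea described above (many trees, random feature choice, aggregated votes) can be sketched as follows. This is a deliberately simplified illustration, not a full random forest: each "tree" is a one-level stump that splits at the mean of a randomly chosen feature, trained on a bootstrap sample. The data, function names, and the mean-threshold rule are all assumptions made to keep the example short.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def train_stump(rows, labels, feature):
    """One-level tree: split at the mean of the chosen feature."""
    threshold = sum(row[feature] for row in rows) / len(rows)
    left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
    right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
    fallback = majority(labels)  # used when one side of the split is empty
    return (feature, threshold,
            majority(left) if left else fallback,
            majority(right) if right else fallback)

def train_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample
        feature = rng.randrange(len(rows[0]))           # random feature choice
        forest.append(train_stump([rows[i] for i in idx],
                                  [labels[i] for i in idx], feature))
    return forest

def predict(forest, row):
    """Aggregate the individual trees by majority vote."""
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return majority(votes)

# Hypothetical two-feature dataset with two well-separated classes
rows = [(1, 1), (2, 1), (1, 2), (2, 2), (8, 9), (9, 8), (8, 8), (9, 9)]
labels = ["low"] * 4 + ["high"] * 4

forest = train_forest(rows, labels)
print(predict(forest, (1, 1)), predict(forest, (9, 9)))
```

An individual stump trained on an unlucky bootstrap sample can be wrong, but the vote across all trees smooths those errors out, which is the robustness benefit the ensemble provides.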

Linear regression is a fundamental statistical method for modeling the relationship between a dependent variable and one or more independent variables. It assumes a linear relationship between them, letting the model predict the value of the dependent variable as a weighted sum of the independent variables. Its simplicity makes interpretation easy: each coefficient indicates the change in the dependent variable for a unit change in the corresponding independent variable.
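For a single independent variable, the weighted-sum model described above can be fitted with the closed-form ordinary least squares formulas. The sketch below uses made-up data points that lie exactly on a line, so the recovered slope and intercept are easy to verify by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is covariance(x, y) divided by variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical observations generated from y = 2x + 1
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)           # coefficient and intercept of the fitted line
print(slope * 5 + intercept)      # prediction for a new input x = 5
```

The slope is the coefficient mentioned in the text: each unit increase in `x` changes the prediction by that amount.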


Let’s now look at the scenario surrounding the development and implementation of ML.

ML can play a crucial role in your organization’s strategic decision-making, helping it proactively adapt to evolving conditions and make the most of new opportunities. ML algorithms, when coupled with predictive analytics, can leverage large datasets to identify trends, dynamics, and competition in the market, thereby helping you become more agile. ML also helps mitigate risks through the evaluation of probable outcomes, paving the way for more resilient business and operations plans.

ML is characterized by its ability to execute tasks by identifying patterns and to autonomously improve its performance through experience, without needing to be explicitly programmed for each task. Some of the major characteristics of ML include:

There are two primary types of Machine Learning: supervised and unsupervised. Let’s examine the two briefly:

In supervised machine learning, a model is trained on a labeled dataset, where each input is paired with its corresponding output. The aim of the algorithm in such cases is to learn the mapping from inputs to outputs, which gives it the ability to predict outcomes for unseen data. Common examples of this type of ML are classification tasks like image recognition and spam detection.

On the other hand, unsupervised learning works with unlabeled data. Unsupervised ML models try to identify patterns and structures that are present in the training data itself. Examples of such activities are clustering, where similar data points are grouped, and dimensionality reduction, which aims to simplify datasets. Some applications of unsupervised learning include customer segmentation in marketing and the detection of network security anomalies.
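As an illustration of clustering on unlabeled data, here is a small sketch of k-means on one-dimensional values, written in plain Python. The spend figures and starting centers are hypothetical, and k-means is just one of several clustering methods that could be used for a task like customer segmentation.

```python
def kmeans_1d(values, centers, iterations=10):
    """Basic k-means on 1-D data: assign to nearest center, then re-average."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty)
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical monthly spend per customer; no labels are provided
spend = [1, 2, 3, 20, 21, 22]
centers, clusters = kmeans_1d(spend, centers=[0.0, 10.0])
print(centers)
print(clusters)
```

The algorithm is never told which customers belong together; the two groups emerge from the structure of the data alone.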

There are a variety of ML models that are relevant to the specific problem that needs to be addressed. Some common models are:

All these models show how diverse ML is and how extensively it is applied across industries and domains to solve a variety of complex problems. Now that we have covered ML, let’s delve into its most promising subset: DL!

DL is a powerful tool for boosting the productivity achieved through data-driven decisions and automation. Systems based on DL technologies can predict patterns, streamline resource allocation, and improve scheduling through the analysis of past data.

DL uses multilayered neural networks, or Deep Neural Networks (DNNs), to perform a variety of AI-related tasks. One feature that makes DL stand out from other AI technologies is its ability to automatically learn features from large datasets, which lets it represent data effectively without the need for human intervention. This ability makes the technology proficient at handling unstructured audio, image, and text-based data. Besides that, DL models can use both supervised and unsupervised machine learning methods effectively and can extract critically important patterns and relationships from raw data.

The downside of DL models is the significant computational power they need for effective training and the high-performance GPUs necessary for them to function properly.

DL architectures are fundamentally based on neural networks. Such neural networks comprise interconnected nodes properly organized in layers. These nodes and their connections are intended to emulate the function of neurons.

The typical neural network consists of an input layer, one or more hidden layers, and an output layer. The input layer receives the initial data, and the hidden layers then carry out complex transformations and feature extraction, refining the data before it finally reaches the output layer.

Each connection in the neural network has a weight associated with it, which is adjusted during training based on the training data. These weights determine how much emphasis is placed on each input feature during computation. The behavior of individual nodes in such a network is determined by activation functions, which decide whether and how strongly a node activates based on the input it receives.
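The layer structure, weights, and activation functions described above can be sketched as a forward pass through a tiny network. The weights below are hand-picked, hypothetical values rather than trained ones, and the training step that would adjust them is not shown.

```python
import math

def relu(z):
    """Activation that passes positive inputs and blocks negative ones."""
    return max(0.0, z)

def sigmoid(z):
    """Activation that squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: each node applies its activation to a weighted sum plus bias."""
    return [activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
            for node_weights, b in zip(weights, biases)]

# Hypothetical weights: 2 inputs -> 2 hidden nodes -> 1 output node
hidden_w = [[0.5, -0.5], [1.0, 1.0]]
hidden_b = [0.0, -1.0]
output_w = [[1.0, -1.0]]
output_b = [0.0]

def forward(x):
    hidden = dense(x, hidden_w, hidden_b, relu)                 # hidden layer
    return dense(hidden, output_w, output_b, sigmoid)[0]        # output layer

print(forward([1.0, 1.0]))
print(forward([2.0, 0.0]))
```

Changing any weight changes how strongly the corresponding input influences the output, which is what training exploits when it adjusts the weights.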

Several DL models demonstrate their versatility, like:

Some AI models have already become common amongst business organizations like yours and individuals alike as they go about their day-to-day tasks in and out of the office. Let’s look at popular AI models in our comprehensive overview of AI/ML strategies!

AI and associated technologies are developing rapidly, but that progress brings serious ethical concerns with it. As AI systems become more sophisticated, we often neglect the important ethical issues associated with their development. The main ethical issues are as follows:

AI is all set to transform every aspect of modern life. AI systems are advancing at a swift pace and will only become more intelligent and capable with time, revolutionizing a vast range of industries and sectors. AI will play a greater role in the automation of crucial business processes and enhance the effectiveness of data-driven decision making. It is expected to create new jobs while displacing others. It is the brave new frontier of human innovation, and it is expected to develop in the following ways.

| Future Trends of AI and ML | Description |
| --- | --- |
| Explainable AI (XAI) | Tools that help answer why and how an AI system reached a given output, which helps address legal and ethical concerns |
| Multimodal AI | AI systems that can understand, analyze, and create information across different types of data like text, audio, video, and images |
| Gen AI | Engineered to create human-like content across various formats like text, image, and video |
| Quantum Computing | Has the potential to speed up ML and reduce execution time |
| Big Model Creation | All-purpose models that perform tasks in various realms concurrently |
| Federated Learning | Allows ML algorithms to learn from decentralized data without exchanging sensitive information |

AI and ML are likely to advance rapidly in fields like computer vision, natural language processing, and autonomous systems. AI and ML applications are already transforming a vast set of industries- adding new abilities to their functions and enhancing their efficiency. Personalized healthcare solutions and optimized supply chain management are some of the diverse potential AI applications. AI is being used in the healthcare sector to help in diagnosis and relevant predictive analytics. Financial institutions are early adopters of AI technology, using its potential to detect fraud and assess risks more properly and accurately. Additionally, Generative AI has already shaken the world of content creation and design. With advancements in the development of AI technology, the ethical use of AI will take center stage, creating transparent, accountable, and humanized AI systems.

In conclusion, AI and ML strategies are transforming industries by enabling automation, enhancing decision-making, and driving innovation. AI focuses on creating systems that mimic human intelligence, while ML, a subset of AI, allows machines to learn from data without explicit programming. Organizations are leveraging these technologies to enhance customer experiences, optimize operations, and gain a competitive edge. Successful AI and ML strategies require a strong data foundation, skilled talent, and a focus on ethical considerations, such as bias mitigation and transparency.

With over 800 engineering projects spanning Data Centers, IoT, Machine Learning, and Data Analytics, Calsoft delivers exceptional results that drive success across diverse domains. Our expertise lies in big data analytics, traditional analytics, and Generative AI, focusing on enterprises and ISVs.